Introduction

During my BFA in Design and Computation Arts, I had the chance to work as a Research Assistant on a research-creation project funded by the Canada Foundation for Innovation, through Hexagram at Concordia University and Université de Montréal. The project was led by two researchers, Martin Racine and Philippe Lalande. I joined the project in 2013 as a graphic designer, to produce its visual identity as well as various design materials. However, I quickly became involved in the research itself and remained a contributing member of the team until the project’s completion in 2016. This experience fostered my interest in graduate studies and research-oriented careers. In this case study, I outline the various paths we explored in this research project, which, for simplicity, we will call DNA.

Summer 2013 was a very interesting period. We had just received our funding and were figuring out how to develop the project further. I remember that we had a three-to-four-year window, so we had a lot of time ahead of us. Research-creation is a particularly organic methodology, and our goal was broad: to design and think about the future life of objects. Our working hypothesis was that the objects that surround us every day contain a lot of information about themselves and data about our behavior. We also began to think that these objects could become autonomous entities, with their own identities, emotions and stories, and that they could become active participants alongside humans, as well as among themselves. We had a lot of ideas and questions, but few answers or solutions.

During the following fall and winter semesters, the project began to take shape. We created the manifesto for the Future Life of Objects, and the project was born. Our goal was to produce an exhibition where visitors could experience each of the six manifesto declarations. Every declaration started with the same phrase: In the future, objects will:

- Reveal their anatomy

- Declare their impacts

- Tell their story

- Express their emotions

- Care for their descendants

- Communicate with each other

Now we were really getting going. Until that point, I had been assigned to produce graphic material: I designed the visual identity and the manifesto, and I even started designing our website. As we transitioned into building an interactive exhibition, I became more involved as a researcher. In this case study, I will detail three major directions we designed with the team. Most of these experiments were done in close collaboration with Hugues Rivest, another researcher with whom I spent most of my days designing them. We had a lot of fun together, and I believe that is why we were so successful in the long run. We were far from alone, though: Alexandre Joyce produced nearly all of the data we were able to visualize and was the driving force behind the entire project, and Elio Bidinost, a true wizard, gave us a hell of a jump start with his experience in electronics and computer engineering. Finally, Martin and Philippe L. were our mentors and leaders through all the crazy contraptions, ideas and theories we came up with.

The three directions explored in this case study are computer vision, Arduino with Unity, and Arduino with sound. They relate to four of the six manifesto declarations: Reveal their anatomy; Declare their impacts; Express their emotions; Communicate with each other.

Computer Vision

At first, we were very interested in the concept of interactive surfaces. We had seen demos of reacTIVision being used to drive a modular synthesiser, and we sought to apply this idea to interactive exhibition modules. At the time, this approach promised to let us adapt the same technology to different exhibition modules, one per manifesto declaration.

Our initial setup used TUIO for Processing: an IR laser diode pointed at a camera, which in turn controlled a pointer on the screen. We redirected the beam of a conventional projector onto a translucent surface, creating a backlit surface well suited to manipulation and interaction.
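To illustrate the pointer step, here is a minimal sketch of mapping a tracked point to screen pixels. It assumes positions normalized to the 0..1 range, which is how TUIO reports them; the `Cursor` class, the `to_screen` function and the `mirror_x` flag are hypothetical names, not part of TUIO for Processing, and mirroring only matters if the camera films the surface from behind.

```python
from dataclasses import dataclass


@dataclass
class Cursor:
    """A tracked point with x and y normalized to 0..1 (hypothetical class)."""
    x: float
    y: float


def to_screen(cursor: Cursor, width: int, height: int, mirror_x: bool = False) -> tuple:
    """Map a normalized cursor position to integer pixel coordinates."""
    # Clamp so that noisy readings near the edges never leave the screen.
    x = min(max(cursor.x, 0.0), 1.0)
    y = min(max(cursor.y, 0.0), 1.0)
    if mirror_x:
        # A camera behind a translucent surface sees the image flipped horizontally.
        x = 1.0 - x
    # The last valid pixel index on each axis is size - 1.
    return round(x * (width - 1)), round(y * (height - 1))


print(to_screen(Cursor(0.5, 0.25), 1025, 769))  # (512, 192)
```

Scaling by `size - 1` rather than `size` keeps a reading of exactly 1.0 on the last pixel instead of one past it.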

In the first experiment, we wanted to make people aware of the environmental impact of objects; the associated manifesto declaration stated that objects would declare their impacts. The object for this experiment was an articulated lamp. It made the interaction meaningful while adding a playful twist: as the user manipulated the lamp, it lit up the surface while declaring its impacts.

The first iteration was exactly that: I had drawn a schematic map of the path an object travels, from extraction and manufacturing to the end of its life, including transport and use. We found that while the interaction was fun, the information transfer was poor, since data was lost unless the user lit it up.

The second iteration aimed to make things clearer. On the surface, we displayed a screen of information about our lamp, divided into five phases. By moving the lamp around, users would learn that most of its environmental impact came from its use. This worked quite well. We were learning, but not learning enough, or perhaps not grasping the right message. We realized that we were more interested in fostering an emotional response to this data, so we had to expand our communication strategy.

From this point, we moved away from the IR pointer. This decision was fuelled by the desire to diversify the objects we could use, and to identify them correctly as we placed them on a surface. Luckily, we already had what we needed: reacTIVision, the computer-vision software that sends TUIO messages, supported tracking small printed images called fiducials. The software could recognize these images and translate them into screen coordinates, along with their rotation around a single axis.
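A minimal sketch of how recognized fiducials could be turned into named objects with an orientation. It assumes each marker arrives with its ID, a normalized position and an angle in radians (the form used by TUIO's 2D object messages); the `OBJECTS` table, the object names and `describe` are hypothetical illustrations, not code from the project.

```python
import math
from dataclasses import dataclass

# Hypothetical table: which printed fiducial is attached to which object.
OBJECTS = {0: "lamp", 1: "kettle", 2: "toaster"}


@dataclass
class FiducialObject:
    fiducial_id: int  # ID of the recognized marker
    x: float          # normalized 0..1 position on the surface
    y: float
    angle: float      # rotation around the axis perpendicular to the surface, in radians


def describe(obj: FiducialObject) -> str:
    """Name the object behind a marker and report its rotation in degrees (0..360)."""
    name = OBJECTS.get(obj.fiducial_id, "unknown object")
    degrees = math.degrees(obj.angle) % 360
    return f"{name} at ({obj.x:.2f}, {obj.y:.2f}), rotated {degrees:.0f} degrees"


print(describe(FiducialObject(1, 0.5, 0.25, math.pi)))
```

Because each marker carries its own ID, the same surface can recognize any tagged object, which is what freed the experiments from the single IR lamp.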

To foster an emotional response, we wanted to give the objects a voice. After all, they were meant to declare their impacts, and we, the human users, should not have to look too hard for them. Sound was an obvious solution. We designed two additional iterations that gave objects a voice of their own. In the third iteration, we associated each object with a unique sound signature: the environmental impact data was displayed on screen and also conveyed through the object's sound signature. We found that the melodies generated by this experiment were very powerful and made us more aware of the different impacts associated with objects.
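The project's actual sound design is not documented here, so the following is only a hypothetical sketch of one way to turn impact data into a melody: each object gets a base MIDI note as its signature, and each life-cycle phase becomes one note whose pitch and length grow with that phase's share of the total impact. The function names, the pentatonic scale and the timing values are all assumptions.

```python
# Semitone offsets of a major pentatonic scale, chosen so any sequence sounds consonant.
PENTATONIC = [0, 2, 4, 7, 9]


def midi_to_hz(note: int) -> float:
    """Convert a MIDI note number to a frequency (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def impact_melody(base_note: int, impacts: list) -> list:
    """Return one (midi_note, seconds) pair per life-cycle phase.

    base_note is the object's hypothetical "signature"; impacts holds one
    value per phase (extraction, manufacturing, transport, use, end of life).
    """
    total = sum(impacts)
    melody = []
    for value in impacts:
        share = value / total if total else 0.0
        # Heavier phases climb higher in the scale...
        step = min(int(share * len(PENTATONIC)), len(PENTATONIC) - 1)
        # ...and are held longer.
        duration = round(0.25 + share, 3)
        melody.append((base_note + PENTATONIC[step], duration))
    return melody


print(impact_melody(60, [1, 1, 8, 0, 0]))
```

Giving two objects different base notes keeps their signatures distinguishable, while the shape of each melody still follows the impact data.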

The fourth iteration concerned the last manifesto declaration: in the future, objects will communicate with each other. Small particles were emitted from the objects. When objects were close enough, they formed a connection, and their particles were drawn toward each other. When a particle reached another object, it emitted a small sound and died, completing the communication process. This experiment showed us that communication patterns can be very complex: groups of four objects behaved differently from groups of two, and the proximity and arrangement of objects in space influenced how they communicated. In short, the patterns were unpredictable and very similar to communication among humans, which we found very powerful.
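This behaviour can be approximated with a small particle simulation. It is a hypothetical sketch, not the project's code: objects are 2D positions, any two within `CONNECT_RADIUS` are connected, each connected object sends a particle toward each partner, and a particle that reaches its target is removed and reported as a sound event. The radius and speed values are assumptions. Even this toy version hints at why group size matters: two nearby objects form one connection, while four clustered objects can form up to six.

```python
import math
from dataclasses import dataclass

CONNECT_RADIUS = 0.3  # hypothetical: how close two objects must be to connect
SPEED = 0.05          # hypothetical: distance a particle travels per step


@dataclass
class Particle:
    x: float
    y: float
    target: str  # name of the object this particle is travelling to


def connections(objects: dict) -> list:
    """Return every pair of object names close enough to communicate."""
    names = sorted(objects)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if math.dist(objects[a], objects[b]) <= CONNECT_RADIUS
    ]


def emit(objects: dict) -> list:
    """Each connected object sends one particle toward each of its partners."""
    particles = []
    for a, b in connections(objects):
        particles.append(Particle(*objects[a], target=b))
        particles.append(Particle(*objects[b], target=a))
    return particles


def step(particles: list, objects: dict) -> tuple:
    """Move particles toward their targets; return (survivors, sound events)."""
    survivors, sounds = [], []
    for p in particles:
        tx, ty = objects[p.target]
        d = math.dist((p.x, p.y), (tx, ty))
        if d <= SPEED:
            sounds.append(p.target)  # arrived: play a small sound and let it die
            continue
        p.x += (tx - p.x) / d * SPEED
        p.y += (ty - p.y) / d * SPEED
        survivors.append(p)
    return survivors, sounds


objects = {"lamp": (0.0, 0.0), "kettle": (0.2, 0.0), "radio": (0.9, 0.9)}
particles = emit(objects)
heard = []
while particles:
    particles, sounds = step(particles, objects)
    heard.extend(sounds)
print(heard)
```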

We also looked into other tracking technologies such as tracking.js, a library that uses an in-browser webcam feed to track colors in live video. Using it, we were able to simulate a tomographic view of a flashlight held up to the camera. This iteration concerned the first manifesto declaration: in the future, objects will reveal their anatomy. We found that we are largely unaware of the complexity of the objects that surround us, and that revealing their internal structure gives us a better understanding of them.
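tracking.js does this in the browser; to show the underlying idea independently of that library, here is a simplified color-tracking sketch in Python. It thresholds each pixel against a target color, returns the bounding box of all matches (a real tracker would also separate distinct blobs), and uses the box's horizontal center to pick one of several pre-rendered cross-section images. The frame format, the tolerance and the slice-selection step are assumptions about how such a tomographic view could work.

```python
def matches(pixel: tuple, target: tuple, tolerance: int) -> bool:
    """True when every RGB channel is within `tolerance` of the target color."""
    return all(abs(c - t) <= tolerance for c, t in zip(pixel, target))


def track_color(frame: list, target: tuple, tolerance: int = 40):
    """Return the bounding box (x0, y0, x1, y1) of matching pixels, or None."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if matches(pixel, target, tolerance):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)


def slice_for(box: tuple, frame_width: int, n_slices: int) -> int:
    """Pick which pre-rendered cross-section to show from the box's horizontal center."""
    cx = (box[0] + box[2]) / 2
    return min(int(cx / frame_width * n_slices), n_slices - 1)


BLACK, RED = (0, 0, 0), (255, 0, 0)
frame = [[BLACK] * 4, [BLACK, BLACK, RED, RED], [BLACK] * 4]  # a tiny 4x3 "webcam" frame
box = track_color(frame, (250, 10, 10))
print(box, slice_for(box, 4, 8))
```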

Sadly, we were unable to refine these last three iterations into exhibition units: they were complex and unstable, and therefore likely to cause problems later on. We also realized that we needed comparable objects, which we did not have, to convey differences across similar objects. These visualizations showed that most of the environmental impact came from the use of the objects, and we wanted objects with more contrasting environmental impacts.

Arduino + Unity

During the fall and winter semesters, we took time to analyse our progress so far. We had six exhibition modules to produce, and only half of them were being designed. We quickly realized that our universal approach would not benefit the exhibition: the technology we had designed was only loosely applicable to the other manifesto declarations, and it was difficult to replicate across six exhibition modules for financial and technical reasons. We needed other ways to produce interactive experiments for users.

We initially looked at different types of sensors, mainly accelerometers and gyroscopes that could translate the manipulation of objects. We were very keen to keep a tactile, physical relationship between users and our exhibition modules; we were, after all, speculating about tangible objects. This experiment used an Arduino coupled with the Unity game engine. Through the serial port, we could design custom controller input from almost any sensor we could think of, and the process was quite simple. In addition, Unity gave us far more power than Processing had in the previous experiments.
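The serial link can be as simple as the Arduino printing one line of text per reading and the host splitting it into named values. The project's Unity scripts would have been written in C#; the sketch below only illustrates the parsing logic in Python, and the `name:value,name:value` line format and the `parse_line` function are hypothetical.

```python
def parse_line(line: str) -> dict:
    """Parse a hypothetical 'name:value,name:value' sensor line into floats.

    On the Arduino side, such a line could be produced with Serial.print()
    calls followed by Serial.println(). Serial data can arrive truncated or
    corrupted, so malformed fields are skipped instead of raising an error.
    """
    readings = {}
    for field in line.strip().split(","):
        name, sep, raw = field.partition(":")
        name = name.strip()
        if not sep or not name:
            continue  # no "name:" prefix, e.g. a partial field
        try:
            readings[name] = float(raw)
        except ValueError:
            continue  # e.g. a half-received number
    return readings


print(parse_line("pot:512,tilt:-12.5\r\n"))
```

Naming each value makes it easy to add a new sensor without breaking the receiving script, which fits the "almost any sensor" flexibility described above.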

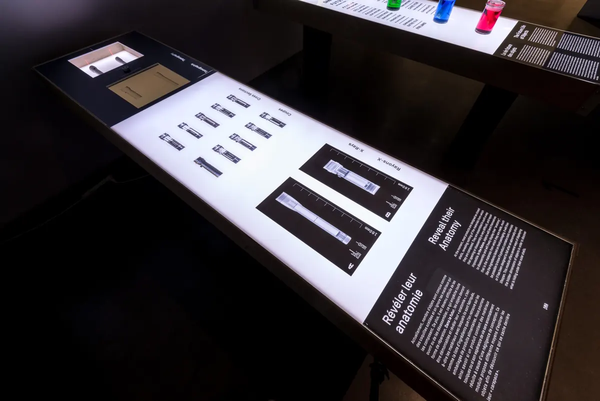

In our first iteration, we transformed our earlier tomography work into a comparative anatomy experiment. We were inspired to use a “laser” to scan the objects from left to right, controlled by a potentiometer. The potentiometer's readings were sent to Unity, while its rotation was also mechanically translated into a linear movement across the physical objects. The position of the laser thus drove simulated tomographic imagery on a screen. We found this experiment very successful and effective at revealing the anatomy of an object, as well as at comparing two similar objects. This iteration became part of one exhibition module.
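A minimal sketch of the scanning logic, assuming the Arduino forwards raw `analogRead()` values, which range from 0 to 1023 on 10-bit boards such as the Uno. The reading is normalized to a 0..1 laser position, lightly smoothed to hide sensor noise, and turned into the index of a pre-rendered tomographic slice. The `LaserScan` class, the smoothing factor and the slice-image approach are assumptions, not the module's actual implementation.

```python
ADC_MAX = 1023  # analogRead() range on 10-bit boards such as the Arduino Uno


class LaserScan:
    """Turn raw potentiometer readings into a tomographic slice index (hypothetical)."""

    def __init__(self, n_slices: int, smoothing: float = 0.3):
        self.n_slices = n_slices
        # Exponential smoothing factor in (0, 1]: lower is steadier but lags more.
        self.smoothing = smoothing
        self.position = None  # normalized laser position, 0 = left edge, 1 = right edge

    def update(self, raw: int) -> int:
        """Feed one raw reading and return the slice to display."""
        target = min(max(raw, 0), ADC_MAX) / ADC_MAX
        if self.position is None:
            self.position = target  # first reading: no history to smooth against
        else:
            self.position += self.smoothing * (target - self.position)
        # Clamp so that position 1.0 maps to the last slice, not one past it.
        return min(int(self.position * self.n_slices), self.n_slices - 1)


scan = LaserScan(n_slices=10, smoothing=0.5)
print([scan.update(raw) for raw in (0, 1023, 1023, 1023)])
```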

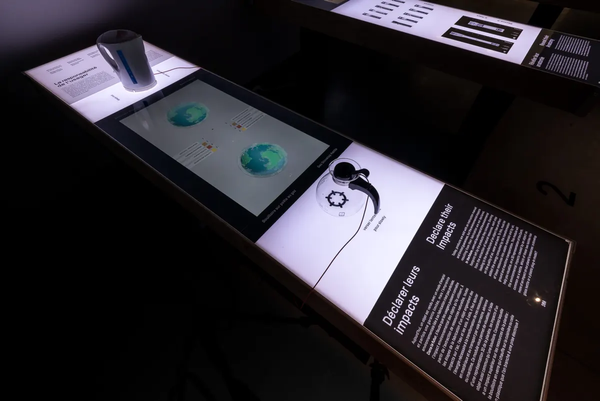

Our second iteration made use of gyroscope sensor boards to determine the orientation of a particular object. At first, we tried to translate the physical movement directly into Unity’s 3D environment. However, we found that this type of movement could induce motion sickness in some people, because it was often out of sync and jittery. Instead, we focused on a single expected movement. For this, we used two different kettles, whose expected movement was pouring liquid. This movement triggered an animation revealing the impacts of the associated kettle. As with the IR lamp, we found that an expected manipulation made the experiment much more comprehensible for the user, and that the simplicity of the movement was beneficial.

As for the interface, we wanted to convey a sense of impact on the planet. This is why we built a globe on which water would become polluted, smog would appear around the largest cities, and resources and land would become unusable. We were more interested in conveying the differences between the two objects: an electric kettle powered by hydroelectricity versus a conventional kettle on a gas stovetop. The globe showed that the two have different impacts on the planet. We found this interaction very captivating, and it engaged users in a meaningful experience, yet it did not convey any complex data.
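The pour trigger can be sketched as a threshold on the kettle's tilt. Two common techniques address the jitter mentioned above: a low-pass filter that smooths the readings, and hysteresis, where pouring starts above one angle and stops only below a lower one, so the animation does not flicker around the threshold. This sketch assumes the sensor board already reports tilt from upright in degrees; the `PourDetector` class and its threshold values are hypothetical.

```python
class PourDetector:
    """Detect a pouring gesture from tilt readings, in degrees from upright (hypothetical)."""

    def __init__(self, start_deg: float = 45.0, stop_deg: float = 30.0, alpha: float = 0.2):
        if stop_deg >= start_deg:
            raise ValueError("stop_deg must be below start_deg to form a hysteresis band")
        self.start_deg = start_deg
        self.stop_deg = stop_deg
        self.alpha = alpha      # low-pass factor in (0, 1]: lower filters more jitter
        self.filtered = 0.0     # smoothed tilt
        self.pouring = False

    def update(self, tilt_deg: float):
        """Feed one reading; return "start", "stop" or None."""
        self.filtered += self.alpha * (tilt_deg - self.filtered)
        if not self.pouring and self.filtered >= self.start_deg:
            self.pouring = True
            return "start"   # e.g. begin the impact animation
        if self.pouring and self.filtered <= self.stop_deg:
            self.pouring = False
            return "stop"
        return None


detector = PourDetector()
print([detector.update(90) for _ in range(4)])  # a sustained tilt, not a one-off spike
```

With filtering, a single jittery spike is absorbed; only a tilt held for several readings starts the animation.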

Arduino + sound

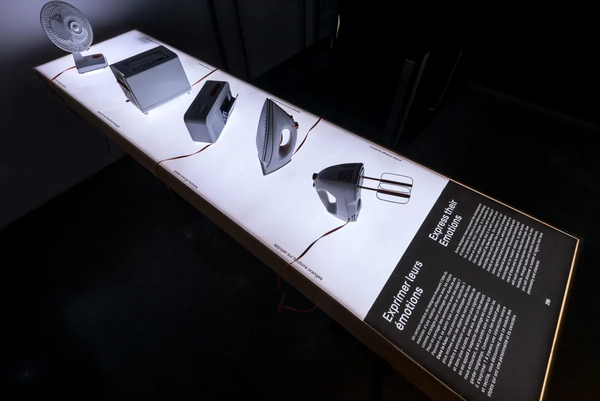

In my last experiment, I led the design of five objects for the manifesto declaration: in the future, objects will express their emotions. We had found earlier that giving a voice to objects could encourage people to care for them. The objective was therefore to give personalities to five objects, so that manipulating them would trigger reactions. Sounds were produced to match each personality and its emotions. Every object was unique, and this is what we needed to convey. It was also important to preserve the external integrity of the objects. For this reason, I retrofitted them with analog and digital inputs, such as buttons and potentiometers, read by an Arduino and then translated into sounds by an external sound card. The sounds came out of each object through an internal speaker. As expected, the experience triggered a lot of surprise from users. This supported the hypothesis that if objects had emotions, they would not be as boring, and we would not feel the urge to replace them at the slightest inconvenience.
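A hypothetical sketch of the reaction logic for one object. It reacts only when a button is first pressed (edge detection), not for as long as it is held, and switches to an "annoyed" sound once it has been pressed more than a set number of times, as a simple stand-in for a personality. The input names, sound files and `patience` rule are invented for illustration; in the installation, the inputs were read by an Arduino and the sounds played through an external sound card.

```python
from dataclasses import dataclass, field


@dataclass
class EmotionalObject:
    """One retrofitted object with a simple, hypothetical personality."""
    name: str
    reactions: dict    # input name -> sound file; "annoyed" is a special mood
    patience: int = 3  # presses tolerated before the object gets annoyed
    _held: dict = field(default_factory=dict, init=False, repr=False)
    _presses: int = field(default=0, init=False, repr=False)

    def on_input(self, input_name: str, is_down: bool):
        """Return the sound to play for this input change, or None."""
        was_down = self._held.get(input_name, False)
        self._held[input_name] = is_down
        if not is_down or was_down:
            return None  # released, or still held: only react to a new press
        self._presses += 1
        if self._presses > self.patience:
            return self.reactions.get("annoyed")
        return self.reactions.get(input_name)


toaster = EmotionalObject("toaster", {"lever": "giggle.wav", "annoyed": "grumble.wav"}, patience=2)
print([toaster.on_input("lever", down) for down in (True, True, False, True, False, True)])
```

Giving each of the five objects its own `reactions` table and `patience` value is one cheap way to make their personalities differ.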

Conclusion

These three experiments with computer vision, Arduino, Unity and sound have shown how we can stimulate interest in everyday objects through meaningful interactions. We began with the premise that objects contain hidden information, that they could have feelings and emotions, and that we know almost nothing about them even though we use them every day. This case study has shown how simple interactions can translate into complex behavioral patterns and reveal things we are mostly unaware of. As a researcher, I believe that the iterative methodology we designed through this research-creation project enabled discoveries about how we behave with objects, and about how we can communicate these complex notions to users.